Exploiting the power of smart object recognition

‘iLIDS’ stands for ‘Interactive Locations and Intelligent Digital Signage’. It is part of the Department for Transport’s £9m Innovate UK ‘Accelerating Innovation in Rail’ programme, under which Enable iD, the Rail Safety and Standards Board (RSSB), Arriva UK Trains and research partner the University of Surrey are researching, developing and testing new technology to improve information, safety and accessibility on railway station platforms.

Overview

The main motivation for the final project was the original iLIDS requirement to detect the presence of wheelchair users, bicycles and people with luggage or prams. Detecting them would allow staff to be alerted to passengers who might need assistance, and would enable intelligent lighting or display solutions that provide personalised information to these groups of passengers.

A further aim was to process this information in as privacy-preserving a manner as possible.

Approach

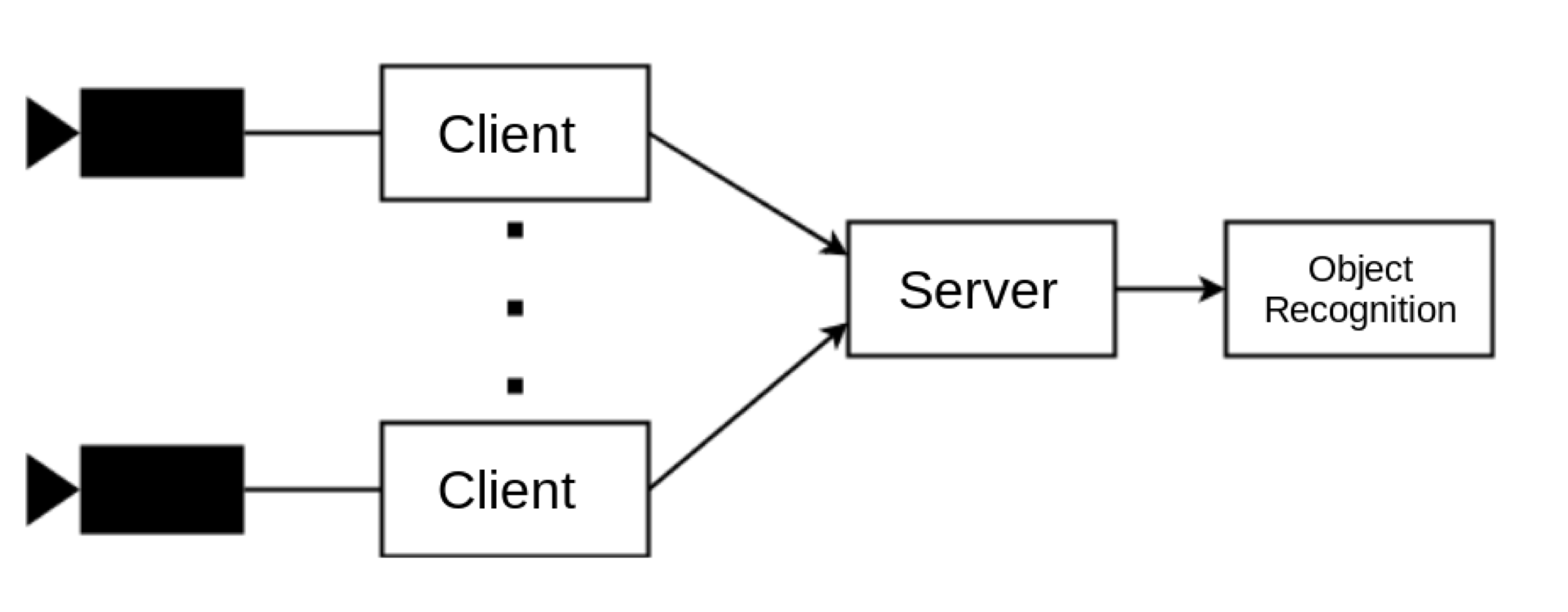

The first part of the project was to ensure that any data from the camera was sent over an encrypted channel. To limit the bandwidth required the receiver could control how much data was sent by the data feed, eg it could request just a single frame or the whole video stream. The client-server architecture of the image transfer application is shown in Figure 4.

Figure 4: Client-Server Architecture of the Video feed
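The report does not describe the wire protocol, so the Python sketch below is only one way such a receiver-controlled feed could work. It assumes TLS (via the standard `ssl` module) as the encrypted channel and a made-up message format: the receiver sends a one-byte command (`F` for a single frame, `S` for a stream) and the camera side replies with length-prefixed frames. All names (`CMD_SINGLE_FRAME`, `handle_client`, `recv_frame` and so on) are hypothetical.

```python
import socket
import ssl
import struct
from typing import Iterator, Optional

# Hypothetical one-byte commands sent by the receiver to control the feed.
CMD_SINGLE_FRAME = b"F"  # send exactly one frame, then stop
CMD_STREAM = b"S"        # keep sending frames until the source ends or a limit is hit


def make_server_context(certfile: str, keyfile: str) -> ssl.SSLContext:
    """TLS context for the camera-side server (certificate paths are placeholders)."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.load_cert_chain(certfile, keyfile)
    return ctx


def make_client_context(cafile: Optional[str] = None) -> ssl.SSLContext:
    """TLS context for the receiver; verifies the server certificate and hostname."""
    ctx = ssl.create_default_context(cafile=cafile)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, or raise if the peer closes the connection early."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed mid-message")
        buf.extend(chunk)
    return bytes(buf)


def send_frame(sock: socket.socket, frame: bytes) -> None:
    """Send one encoded image prefixed with its 4-byte big-endian length."""
    sock.sendall(struct.pack("!I", len(frame)) + frame)


def recv_frame(sock: socket.socket) -> bytes:
    """Receive one length-prefixed image."""
    (length,) = struct.unpack("!I", recv_exact(sock, 4))
    return recv_exact(sock, length)


def handle_client(sock: socket.socket, frames: Iterator[bytes],
                  max_frames: Optional[int] = None) -> int:
    """Serve one request from the receiver; returns the number of frames sent."""
    cmd = recv_exact(sock, 1)
    if cmd == CMD_SINGLE_FRAME:
        send_frame(sock, next(frames))
        return 1
    if cmd != CMD_STREAM:
        raise ValueError(f"unknown command {cmd!r}")
    sent = 0
    for frame in frames:
        try:
            send_frame(sock, frame)
        except (BrokenPipeError, ConnectionResetError):
            break  # the receiver hung up: stop streaming
        sent += 1
        if max_frames is not None and sent >= max_frames:
            break
    return sent
```

In a deployment, the plain sockets would be wrapped with `make_server_context(...).wrap_socket(conn, server_side=True)` on the camera side and `make_client_context().wrap_socket(sock, server_hostname=host)` on the receiver side, so that every frame travels encrypted while the receiver still decides how many frames it asks for.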

Once the server has received an image, it passes it to the Object Recognition Application. The application was developed so that it could run on a CPU-only server but also take advantage of GPU acceleration when a supported graphics card was available.
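The report does not say which framework ran YOLOv3. As one possibility, OpenCV's DNN module can load the Darknet configuration and weights files and select either a CPU or a CUDA backend at run time. The sketch below assumes OpenCV (`opencv-python`); the file paths and the helper names `detect_device` and `load_yolo` are placeholders, and the CUDA backend only works with an OpenCV build compiled with CUDA support.

```python
from typing import Optional, Tuple


def detect_device() -> str:
    """Return "cuda" if OpenCV can see a CUDA-capable GPU, otherwise "cpu"."""
    try:
        import cv2
    except ImportError:
        return "cpu"  # OpenCV not installed: fall back to the CPU path
    try:
        count = cv2.cuda.getCudaEnabledDeviceCount()
    except (AttributeError, cv2.error):
        return "cpu"  # OpenCV build without the CUDA module
    return "cuda" if count > 0 else "cpu"


def load_yolo(cfg_path: str, weights_path: str, device: Optional[str] = None,
              cpu_threads: Optional[int] = None) -> Tuple[object, str]:
    """Load a Darknet YOLOv3 model and choose the backend for the selected device."""
    import cv2
    device = device or detect_device()
    net = cv2.dnn.readNetFromDarknet(cfg_path, weights_path)
    if device == "cuda":
        net.setPreferableBackend(cv2.dnn.DNN_BACKEND_CUDA)
        net.setPreferableTarget(cv2.dnn.DNN_TARGET_CUDA)
    else:
        if cpu_threads is not None:
            cv2.setNumThreads(cpu_threads)  # e.g. 1 for a single-threaded run
        net.setPreferableBackend(cv2.dnn.DNN_BACKEND_OPENCV)
        net.setPreferableTarget(cv2.dnn.DNN_TARGET_CPU)
    return net, device
```

A call such as `net, device = load_yolo("yolov3.cfg", "yolov3.weights")` would then use the GPU when one is available and the CPU otherwise, so the same code serves both kinds of server.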

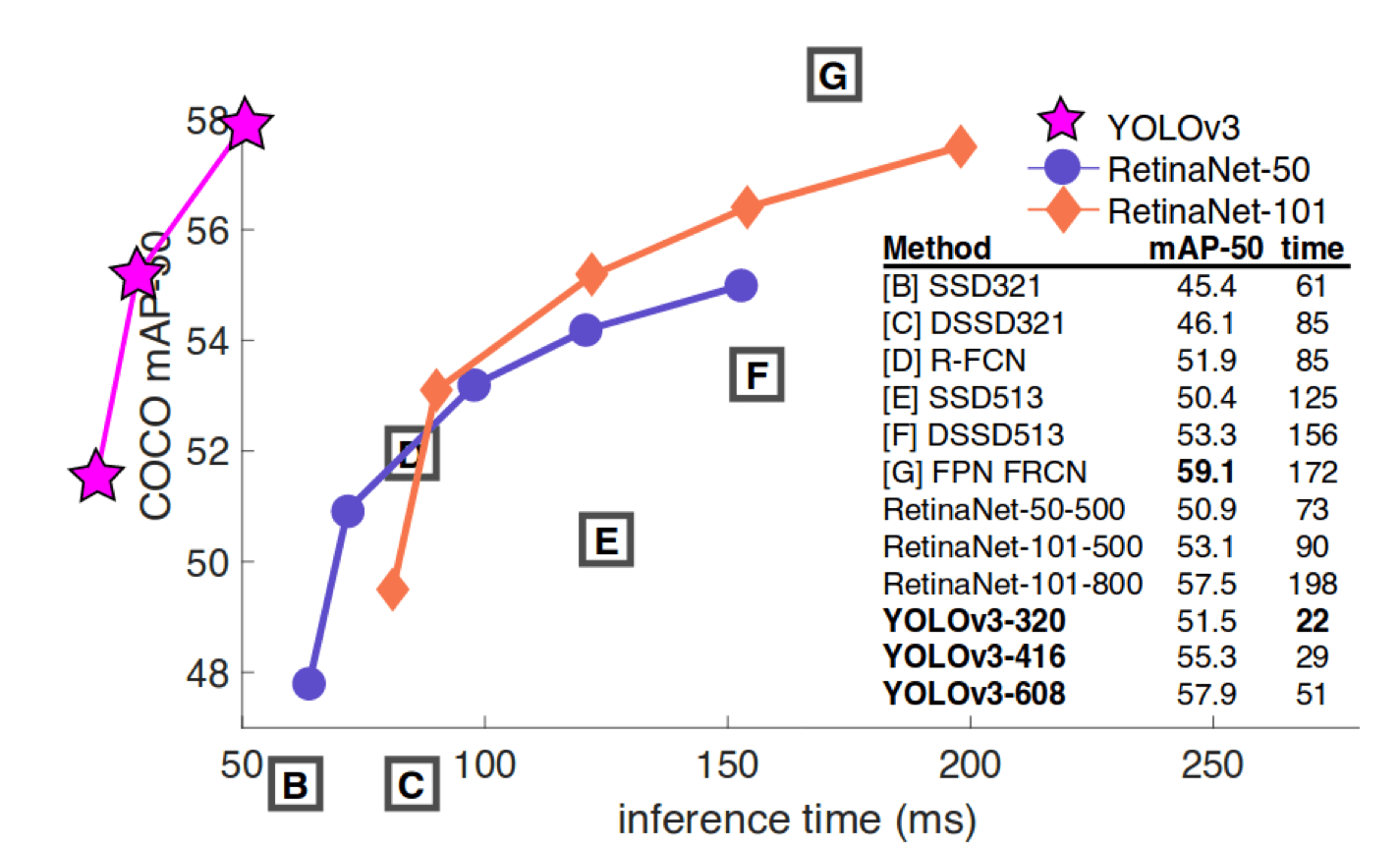

The object recognition algorithm used was YOLO v3 [3]which was chosen for its claimed speed and accuracy as shown in Figure 5

Figure 5: YOLOv3 performance (from [3])
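YOLOv3 typically produces several overlapping candidate boxes per object, which are usually reduced by a confidence threshold followed by non-maximum suppression (NMS). The report does not describe this step, so the following is a generic pure-Python sketch of greedy per-class NMS; the `Detection` type and the default thresholds (0.5 confidence, 0.4 overlap) are assumptions, not values taken from the project.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Detection:
    label: str          # class name predicted by the detector, e.g. "person"
    confidence: float   # score in [0, 1]
    x: float            # top-left corner and size of the box, in pixels
    y: float
    w: float
    h: float


def iou(a: Detection, b: Detection) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a.x + a.w, b.x + b.w) - max(a.x, b.x))
    iy = max(0.0, min(a.y + a.h, b.y + b.h) - max(a.y, b.y))
    inter = ix * iy
    union = a.w * a.h + b.w * b.h - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets: Iterable[Detection], conf_threshold: float = 0.5,
                      nms_threshold: float = 0.4) -> List[Detection]:
    """Drop low-confidence boxes, then greedily suppress same-class overlaps."""
    candidates = sorted((d for d in dets if d.confidence >= conf_threshold),
                        key=lambda d: d.confidence, reverse=True)
    kept: List[Detection] = []
    for d in candidates:
        # Keep d only if no stronger box of the same class overlaps it too much.
        if all(k.label != d.label or iou(k, d) <= nms_threshold for k in kept):
            kept.append(d)
    return kept
```

Suppression is done per class so that, for example, a person standing next to a bicycle is not removed just because their boxes overlap.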

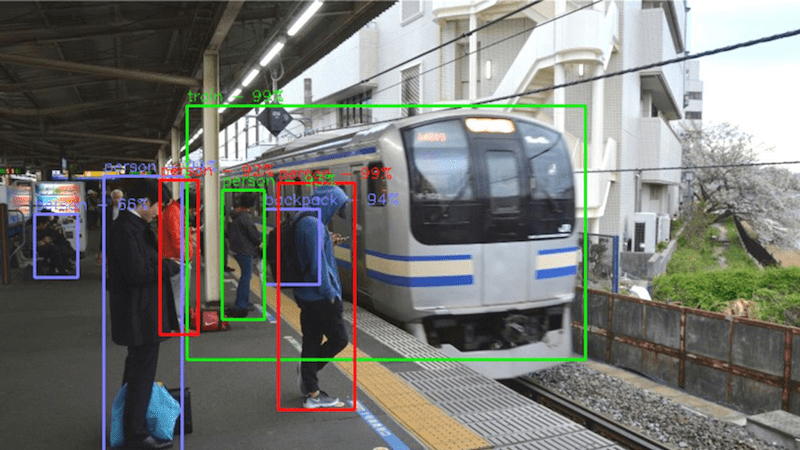

The application then reported the detected objects and associated confidence levels on a website to illustrate the output generated. The following image is an example output that the program generated:

Figure 6: Sample output of Object Recognition Algorithm
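As an illustration of what such a web page might consume, the sketch below turns a list of (label, confidence) pairs into a JSON summary with per-class counts and a list of assistance-related flags. The label mapping in `ASSISTANCE_LABELS` and the report layout are hypothetical. Because the report carries only labels and scores, no image data would need to leave the processing server, which would suit the privacy aim stated in the overview.

```python
import json
from collections import Counter
from typing import Iterable, Tuple

# Hypothetical mapping from detector class names to assistance-related categories.
ASSISTANCE_LABELS = {
    "bicycle": "bicycle",
    "suitcase": "luggage",
    "backpack": "luggage",
}


def build_report(detections: Iterable[Tuple[str, float]], frame_id: str) -> str:
    """Summarise one frame's detections as JSON for display on a web page."""
    dets = sorted(detections, key=lambda d: d[1], reverse=True)  # strongest first
    counts = Counter(label for label, _ in dets)
    flags = sorted({ASSISTANCE_LABELS[label] for label in counts if label in ASSISTANCE_LABELS})
    report = {
        "frame": frame_id,
        "detections": [{"label": label, "confidence": round(conf, 3)} for label, conf in dets],
        "counts": dict(counts),
        "assistance_flags": flags,  # categories staff might want to be alerted to
    }
    return json.dumps(report, indent=2)
```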

The final part of the project was to use Intel’s Software Guard Extensions (SGX) to protect the processing of the images on an untrusted host (e.g. a server provided by a cloud provider). SGX allows programs to execute in encrypted areas of memory, called enclaves, which protect their code and data from the underlying operating system. It promises a secure environment even if the underlying platform is untrusted.

Prototype Evaluation

Table 1 shows the processing times of the object detection algorithm, both measured and estimated (values marked with a question mark are estimates):

| Model | CPU only (single thread) | CPU only (multiple threads) | CPU with SGX enabled (single thread) | CPU with SGX enabled (multiple threads) | GPU accelerated |
|---|---|---|---|---|---|
| Full version of YOLOv3 | 8000 ms | 2400 ms | 87500 ms? | 26000 ms? | 30 ms |
| Tiny version of YOLOv3 | 750 ms | 300 ms | 8200 ms | 3250 ms? | 5 ms |

Table 1: Performance of the object recognition algorithm (CPU: Intel Core i7-4790 @ 4 GHz; GPU: Nvidia GeForce GTX 980 Ti)
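Ratios derived from the measured (non-estimated) values in Table 1 make the comparison concrete. The snippet assumes each figure is the time taken to process one image; the dictionary keys are made-up labels for the table columns.

```python
# Measured values from Table 1 in milliseconds; entries marked "?" (estimates) are left out.
TIMES_MS = {
    "full": {"cpu_single": 8000, "cpu_multi": 2400, "gpu": 30},
    "tiny": {"cpu_single": 750, "cpu_multi": 300, "sgx_single": 8200, "gpu": 5},
}


def ratio(model: str, slower: str, faster: str) -> float:
    """How many times longer the `slower` configuration takes than the `faster` one."""
    return TIMES_MS[model][slower] / TIMES_MS[model][faster]


def images_per_second(model: str, config: str) -> float:
    """Throughput, assuming each table entry is the time to process one image."""
    return 1000 / TIMES_MS[model][config]


print(f"SGX overhead (tiny, single thread): {ratio('tiny', 'sgx_single', 'cpu_single'):.1f}x")
print(f"GPU speed-up vs one CPU thread: full {ratio('full', 'cpu_single', 'gpu'):.0f}x, "
      f"tiny {ratio('tiny', 'cpu_single', 'gpu'):.0f}x")
print(f"Tiny YOLOv3, multi-threaded CPU: {images_per_second('tiny', 'cpu_multi'):.1f} images/s")
```

On these numbers, SGX makes the tiny model roughly 11 times slower in the single-threaded case (8200 ms against 750 ms), and the GPU is between 150 and about 270 times faster than a single CPU thread, a gap of about two orders of magnitude.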

The tiny version of YOLOv3 is a simpler model which is less accurate but can run in memory- and processor-constrained environments such as mobile phones. It was included because of the current memory restrictions on SGX enclaves: enclave memory is limited to 128 MB, and enclaves can only use more through paging. The tiny version of YOLOv3 requires only 38 MB of data to initialise the model, while the full version requires over 240 MB. Actual memory consumption is higher still, so even the tiny version of YOLOv3 needed paging to run in the SGX enclave. This explains the poor performance with SGX: the processor constantly had to swap memory in and out of the enclave while the program was running.
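The paging problem can be quantified by counting 4 KiB enclave pages. The sketch treats the text's MB figures as MiB and uses the full 128 MB as the enclave page cache (EPC) budget; in practice part of the EPC is reserved for SGX's own metadata, so the real headroom is smaller. The 38 MB and 240 MB inputs are model-initialisation data only, which, as noted above, understate actual consumption. The helper names are hypothetical.

```python
import math

MIB = 2**20
PAGE_BYTES = 4096        # SGX enclave pages are 4 KiB
EPC_BYTES = 128 * MIB    # enclave memory limit quoted in the text


def epc_pages(nbytes: int) -> int:
    """Number of 4 KiB enclave pages needed to hold nbytes."""
    return math.ceil(nbytes / PAGE_BYTES)


def overcommit(nbytes: int, budget: int = EPC_BYTES) -> float:
    """Working set as a multiple of the EPC budget (above 1.0, paging is unavoidable)."""
    return nbytes / budget


def pages_not_resident(nbytes: int, budget: int = EPC_BYTES) -> int:
    """Lower bound on pages that cannot be held in the EPC at the same time."""
    return max(0, epc_pages(nbytes) - epc_pages(budget))


for name, size_mib in [("tiny YOLOv3 (init data)", 38), ("full YOLOv3 (init data)", 240)]:
    n = size_mib * MIB
    print(f"{name}: {epc_pages(n)} pages, {overcommit(n):.2f}x EPC, "
          f"at least {pages_not_resident(n)} pages outside the EPC at any time")
```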

Nevertheless, the prototype demonstrated that object recognition can be carried out at reasonable speeds using the tiny version of YOLOv3, even on CPU-only servers, while the GPU-accelerated version is roughly two orders of magnitude faster.

For the problem of detecting specific objects, or the number of people on a platform, the algorithm only needs to process an image every few seconds, as the objects in question are likely to remain reasonably stationary for a considerable amount of time. As such, even the CPU-only version of YOLOv3 could be sufficient, especially the tiny version, which took 300 ms with multiple threads (Table 1).
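A quick feasibility check for this argument divides each measured processing time from Table 1 by the sampling interval. The 5-second interval below is an assumed stand-in for "every few seconds", and `max_images_per_interval` ignores I/O and scheduling overheads, so it is an optimistic upper bound for a server processing images one after another. Both helper names are hypothetical.

```python
import math

INTERVAL_S = 5.0  # assumed sampling period standing in for "every few seconds"

# Measured processing times from Table 1, in milliseconds.
CONFIGS_MS = {
    "full YOLOv3, CPU single thread": 8000,
    "full YOLOv3, CPU multiple threads": 2400,
    "tiny YOLOv3, CPU multiple threads": 300,
    "tiny YOLOv3, SGX single thread": 8200,
}


def utilisation(processing_ms: float, interval_s: float) -> float:
    """Fraction of each interval spent on recognition; above 1.0 the server falls behind."""
    return processing_ms / (interval_s * 1000)


def max_images_per_interval(processing_ms: float, interval_s: float) -> int:
    """Images that fit back to back in one interval (ignores I/O and scheduling overhead)."""
    return math.floor(interval_s * 1000 / processing_ms)


for name, ms in CONFIGS_MS.items():
    u = utilisation(ms, INTERVAL_S)
    verdict = "keeps up" if u <= 1.0 else "falls behind"
    print(f"{name}: {u:.0%} busy ({verdict}), "
          f"up to {max_images_per_interval(ms, INTERVAL_S)} images per interval")
```

With this assumed interval, the tiny model on a multi-threaded CPU is busy only about 6% of the time, while the single-threaded SGX configuration (8200 ms) falls behind; the multi-threaded SGX estimate of 3250 ms would fit within the interval.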

Conclusion and Future Work

The main outcome of this project was a demonstration that image data can be processed in a secure environment using Intel’s SGX, together with a performance baseline. Apart from improving performance, one interesting research direction is to investigate whether the security guarantees of the enclave withstand side-channel attacks, especially when memory must constantly be swapped in and out of the enclave, as was the case in our set-up.

About – Surrey Centre for Cyber Security

The Surrey Centre for Cyber Security is one of the 17 Academic Centres of Excellence in Cyber Security Research (ACEs-CSR) recognised by the UK National Cyber Security Centre (NCSC) in partnership with the Engineering and Physical Sciences Research Council (EPSRC).

The Centre focuses on “Security by Design”, with particular expertise in trusted systems, privacy and authentication, secure communications, and multimedia security and forensics, with human dimensions in security as a cross-cutting theme. We have a strong ethos of putting theory into practice, and the application domains we work in include transportation (automotive, rail), democracy (e-voting), telecommunications, the digital economy, and law enforcement.