Revealing your true colours with facial recognition

‘iLIDS’ stands for ‘Interactive Locations and Intelligent Digital Signage’. It is part of the Department for Transport’s £9m Innovate UK ‘Accelerating Innovation in Rail’ programme, under which Enable iD, the Rail Safety and Standards Board (RSSB), Arriva UK Trains and research partner the University of Surrey are researching, developing and testing new technology to improve information, safety and accessibility on railway station platforms.

Overview

The original iLIDS project aimed to provide personalised information to passengers on the platform or train. This level of personalisation could be achieved with a number of different technologies, including Bluetooth beacon/Wi-Fi sensors, which would detect a person’s mobile handset or a supplied Bluetooth beacon, or biometrics such as fingerprint/palm print or facial recognition.

This summer project looked at the feasibility of using facial recognition to identify passengers so that relevant information could be supplied to them when they stand in front of a “smart” display fitted with a webcam. Alternatively, this technology could be combined with a smart ticketing solution that provides additional information at the ticket gate (eg the platform from which the next appropriate train departs).

Approach

The project’s main brief was to investigate how best to manage the privacy of users of facial recognition in a transport-systems context. As part of the project, a prototype was developed in which a local camera sends an encrypted video/image stream to a server, which carries out the actual facial recognition and returns the appropriate user details, potentially including personalised information about their journey. To protect users’ privacy, the server-side computation should ideally be carried out in such a way that the image data is not accessible to the cloud platform on which the code is executed.
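
The text does not describe the transport beyond reusing the object-recognition project's code. As an illustration only, the following sketch uses Python's standard `ssl` and `struct` modules to show one way a camera could send face crops to the server over TLS. The length-prefixed wire format and all function names are hypothetical, not taken from the prototype.

```python
import ssl
import struct
from typing import Tuple

# Hypothetical wire format (not from the original project):
#   2-byte camera-ID length | camera ID (UTF-8) | 4-byte image length | JPEG bytes
# All lengths are big-endian unsigned integers.
_ID_LEN = struct.Struct(">H")
_IMG_LEN = struct.Struct(">I")


def make_client_context() -> ssl.SSLContext:
    """TLS client context that verifies the server's certificate and hostname
    and refuses anything older than TLS 1.2."""
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def frame_message(camera_id: str, jpeg_bytes: bytes) -> bytes:
    """Pack one face crop so the receiver knows where it ends on the TLS stream."""
    cid = camera_id.encode("utf-8")
    return _ID_LEN.pack(len(cid)) + cid + _IMG_LEN.pack(len(jpeg_bytes)) + jpeg_bytes


def parse_message(data: bytes) -> Tuple[str, bytes]:
    """Inverse of frame_message, as the server would run it."""
    (cid_len,) = _ID_LEN.unpack_from(data, 0)
    offset = _ID_LEN.size
    camera_id = data[offset:offset + cid_len].decode("utf-8")
    offset += cid_len
    (img_len,) = _IMG_LEN.unpack_from(data, offset)
    offset += _IMG_LEN.size
    return camera_id, data[offset:offset + img_len]
```

On the device, a crop would be sent by wrapping a `socket.create_connection((host, 443))` socket with `make_client_context().wrap_socket(sock, server_hostname=host)` and calling `sendall(frame_message(...))`. Note that TLS only protects data in transit: the server still receives plaintext images, which is why the privacy goal described above also requires protecting the server-side computation.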

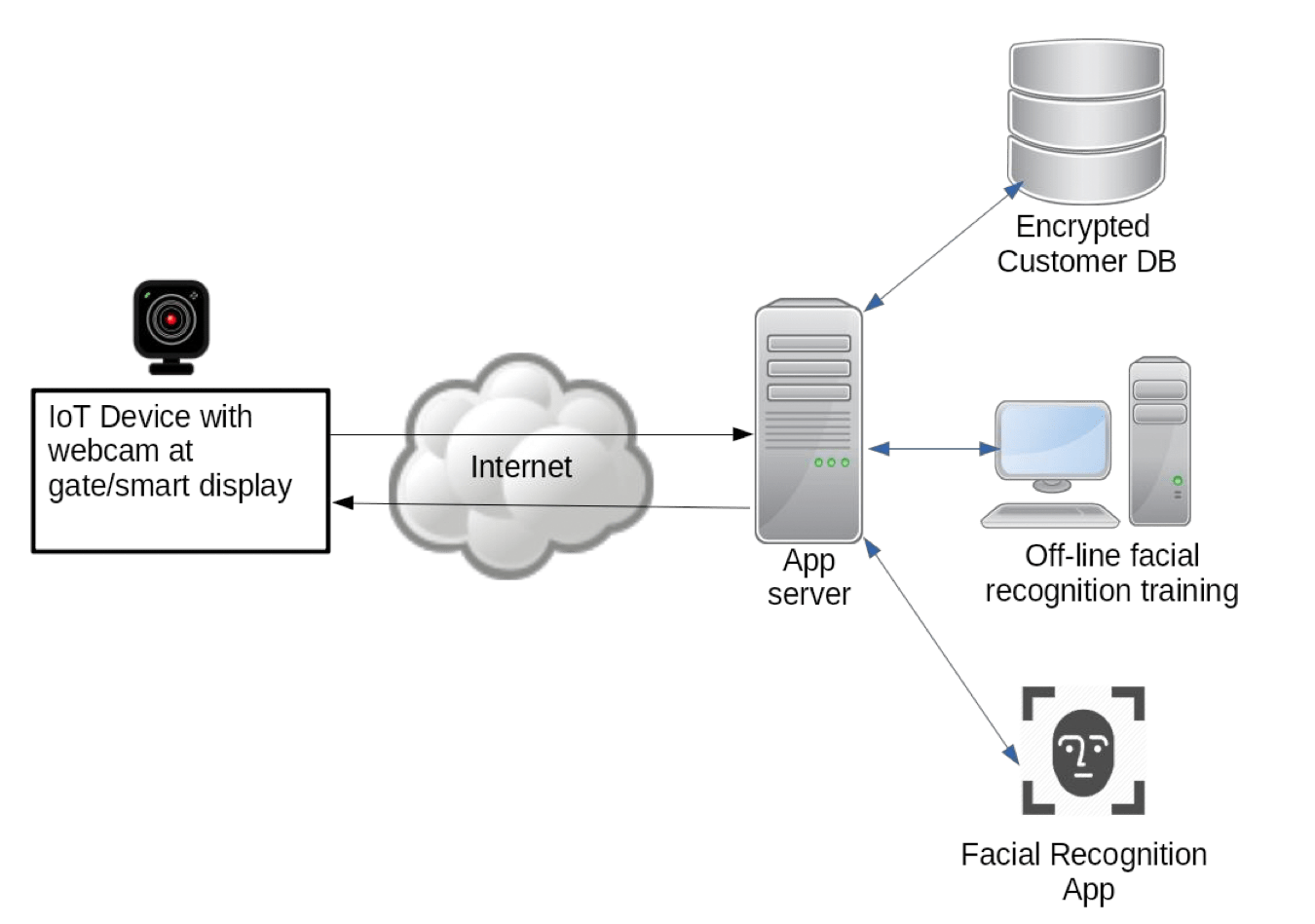

The architecture that was chosen is presented in Figure 7. To minimise the bandwidth requirements, the IoT device is assumed to be capable of running a simple face detection algorithm (which only locates faces, as distinct from recognising whose face it is). For example, a Raspberry Pi 3B+ can carry out face detection in about 400 ms, while an Intel Core i5-6600 CPU @ 3.3 GHz takes about 25-40 ms. Note that the communication between the webcam and the server reused the code developed as part of the object-recognition project.
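
The edge-side filtering described above can be sketched as follows. The face detector itself is passed in as a function (for example a wrapper around whichever detector runs on the Raspberry Pi; the original does not name one), and the `(x, y, width, height)` bounding-box format and the 20% margin around each face are assumptions.

```python
from typing import Callable, Iterable, Iterator, List, Sequence, Tuple

Image = List[List[int]]          # grayscale frame as rows of pixel values
Box = Tuple[int, int, int, int]  # (x, y, width, height) reported by a face detector


def crop_face(frame: Image, box: Box, margin: float = 0.2) -> Image:
    """Cut out a detected face, enlarged by `margin` of the box size on every side
    and clamped to the frame borders."""
    height, width = len(frame), len(frame[0])
    x, y, w, h = box
    dx, dy = int(w * margin), int(h * margin)
    left, top = max(0, x - dx), max(0, y - dy)
    right, bottom = min(width, x + w + dx), min(height, y + h + dy)
    return [row[left:right] for row in frame[top:bottom]]


def faces_to_send(frames: Iterable[Image],
                  detect: Callable[[Image], Sequence[Box]]) -> Iterator[Image]:
    """Edge-side filter: frames with no detected face never leave the device;
    every detected face is forwarded as its own crop."""
    for frame in frames:
        for box in detect(frame):
            yield crop_face(frame, box)
```

Running detection on the device means empty frames cost no upstream bandwidth at all, and the server only ever sees face regions rather than whole platform scenes.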

Once a face has been detected, it is extracted from the image and sent via an encrypted channel over the internet to an application server hosted by a (cloud) service provider. This drastically reduces the bandwidth requirements: instead of transmitting a video stream of, say, 25 frames per second of VGA (640×480 pixels) video, only a few small images of roughly 200×200 pixels are transferred for further processing by the application server.
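
A back-of-the-envelope comparison of the two options, assuming uncompressed 24-bit RGB, 25 VGA frames per second and three 200×200 crops per second (all assumptions; a real link would carry compressed JPEG or H.264 data, so the absolute numbers would be much lower):

```python
def raw_bytes(width: int, height: int, count: int, bytes_per_pixel: int = 3) -> int:
    """Uncompressed size of `count` images of width x height pixels (RGB by default)."""
    return width * height * bytes_per_pixel * count


video = raw_bytes(640, 480, 25)   # one second of VGA video: 23,040,000 bytes
crops = raw_bytes(200, 200, 3)    # three face crops: 360,000 bytes
reduction = video / crops         # 64.0
print(f"{video:,} B/s vs {crops:,} B/s -> {reduction:.0f}x less data")
```

Because both sides use the same pixel format, the 64× ratio is independent of `bytes_per_pixel`; compression would change it, since video codecs also exploit similarity between consecutive frames.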

The application server then passes the image to the face recognition algorithm, which returns a label identifying the passenger together with a confidence score. Provided the confidence score is above a certain threshold (eg >85%), the label is used to look up the customer details in the encrypted customer database.
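
The threshold check can be sketched as below. The `Passenger` fields, the dictionary standing in for the decrypted in-memory customer database, and the 0.85 default (taken from the example threshold above) are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Passenger:            # hypothetical record layout
    name: str
    season_ticket: str


def lookup_passenger(label: str, confidence: float,
                     customers: Dict[str, Passenger],
                     threshold: float = 0.85) -> Optional[Passenger]:
    """Return the customer record for `label` only when the recogniser's
    confidence is strictly above `threshold`; otherwise treat the face as unknown."""
    if confidence <= threshold:
        return None
    return customers.get(label)
```

Returning `None` for low-confidence results lets the display fall back to generic information instead of showing one passenger's details to another.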

Note that the facial recognition algorithm relies on a trained model, which is loaded on start-up. Since the algorithm used (MobileNetV2 [4], provided as part of the TensorFlow library [5]) has a very small footprint, the amount of data stored is in the range of 200 KB. No image data used to train the model is ever stored on the application server.
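
The text does not say what the 200 KB consists of. The full ImageNet-trained MobileNetV2 distributed with Keras is roughly 14 MB (about 3.5 million parameters), so one plausible reading, and it is only an assumption, is that the figure refers to a retrained output layer on top of the network's pooled features. Under that assumption its size can be estimated as follows:

```python
# Assumptions: a dense softmax layer on MobileNetV2's pooled features
# (1280 values for the default width multiplier of 1.0), stored as float32.
FEATURE_DIM = 1280
BYTES_PER_PARAM = 4


def head_size_bytes(num_passengers: int, feature_dim: int = FEATURE_DIM) -> int:
    """Bytes for the weights (feature_dim x N) plus biases (N) of the output layer."""
    params = feature_dim * num_passengers + num_passengers
    return params * BYTES_PER_PARAM


def passengers_for_budget(budget_bytes: int, feature_dim: int = FEATURE_DIM) -> int:
    """Largest number of enrolled passengers whose output layer fits in the budget."""
    return budget_bytes // ((feature_dim + 1) * BYTES_PER_PARAM)


print(head_size_bytes(40))             # 204960
print(passengers_for_budget(200_000))  # 39
```

Under these assumptions a 200 KB layer corresponds to roughly 40 enrolled passengers, and the stored model would grow linearly as more passengers sign up.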

New faces are added to this model using an offline server controlled by the train operator or the organisation responsible for running the service. This is a daily batch process that adds passengers who have newly signed up to the service to the facial recognition model using transfer learning: the existing pre-trained model is retrained to recognise the new faces instead of being trained again from scratch, which reduces training time from days to hours. The new model can then be uploaded to the cloud server in encrypted form, and the facial recognition app reloads the model during the night.
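
The nightly enrolment step can be sketched in pure Python. This is a stand-in, not the project's TensorFlow code: it assumes a frozen MobileNetV2 has already turned each face into a fixed-length embedding (a hypothetical step not shown) and retrains only a softmax output layer, the usual lightweight form of transfer learning. New passengers get new output units, and training warm-starts from the existing weights rather than from scratch.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def softmax(scores: List[float]) -> List[float]:
    top = max(scores)                                  # shift for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class SoftmaxHead:
    """Trainable output layer on top of frozen face embeddings."""

    def __init__(self, dim: int, num_classes: int = 0) -> None:
        self.dim = dim
        self.weights: List[List[float]] = [[0.0] * dim for _ in range(num_classes)]
        self.biases: List[float] = [0.0] * num_classes

    def add_classes(self, count: int) -> None:
        """Enrol new passengers: append zero-initialised output units, keep the old ones."""
        self.weights.extend([0.0] * self.dim for _ in range(count))
        self.biases.extend([0.0] * count)

    def probabilities(self, x: Vector) -> List[float]:
        scores = [sum(w * xi for w, xi in zip(row, x)) + b
                  for row, b in zip(self.weights, self.biases)]
        return softmax(scores)

    def fit(self, xs: Sequence[Vector], labels: Sequence[int],
            epochs: int = 200, lr: float = 0.5) -> None:
        """Per-sample gradient descent on cross-entropy; warm-starts from current weights."""
        for _ in range(epochs):
            for x, label in zip(xs, labels):
                probs = self.probabilities(x)
                for k, p in enumerate(probs):
                    grad = p - (1.0 if k == label else 0.0)   # d(loss)/d(score_k)
                    self.weights[k] = [w - lr * grad * xi
                                       for w, xi in zip(self.weights[k], x)]
                    self.biases[k] -= lr * grad

    def predict(self, x: Vector) -> Tuple[int, float]:
        """Return (label index, confidence) for one embedding."""
        probs = self.probabilities(x)
        best = max(range(len(probs)), key=probs.__getitem__)
        return best, probs[best]
```

In a TensorFlow deployment the same idea would typically be expressed by loading MobileNetV2 without its top layers, setting the base model's `trainable` attribute to `False`, and training a new dense output layer; only that small layer changes from night to night.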

The customer database is also encrypted at rest, and its contents are only decrypted on start-up.

Consequently, all data (customer database and facial recognition model) is encrypted at rest and only held in unencrypted form in memory.

Image data is only ever held in memory and never persisted: once the facial recognition algorithm has returned a label, the image is discarded.
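
Keeping image data in memory only can be illustrated with a small hypothetical helper that hands the recogniser a zero-copy view of the uploaded buffer and overwrites the buffer afterwards. It is a best-effort sketch: Python gives no guarantee that no other copies of the data exist (a recogniser that converts the bytes into a tensor, for example, makes its own copy).

```python
from typing import Callable, Tuple


def recognise_and_discard(image: bytearray,
                          recognise: Callable[[memoryview], Tuple[str, float]]
                          ) -> Tuple[str, float]:
    """Run recognition on an in-memory image buffer, then zero the buffer.

    Nothing is written to disk. Zeroing is best effort: the recogniser (or the
    Python runtime) may have made its own copies, which this cannot reach.
    """
    try:
        with memoryview(image) as view:        # no copy; the view is released on exit
            return recognise(view)
    finally:
        image[:] = bytes(len(image))           # overwrite the caller's buffer in place
```

The `finally` clause ensures the buffer is cleared even if recognition raises an exception.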

Figure 7: Facial Recognition System Architecture

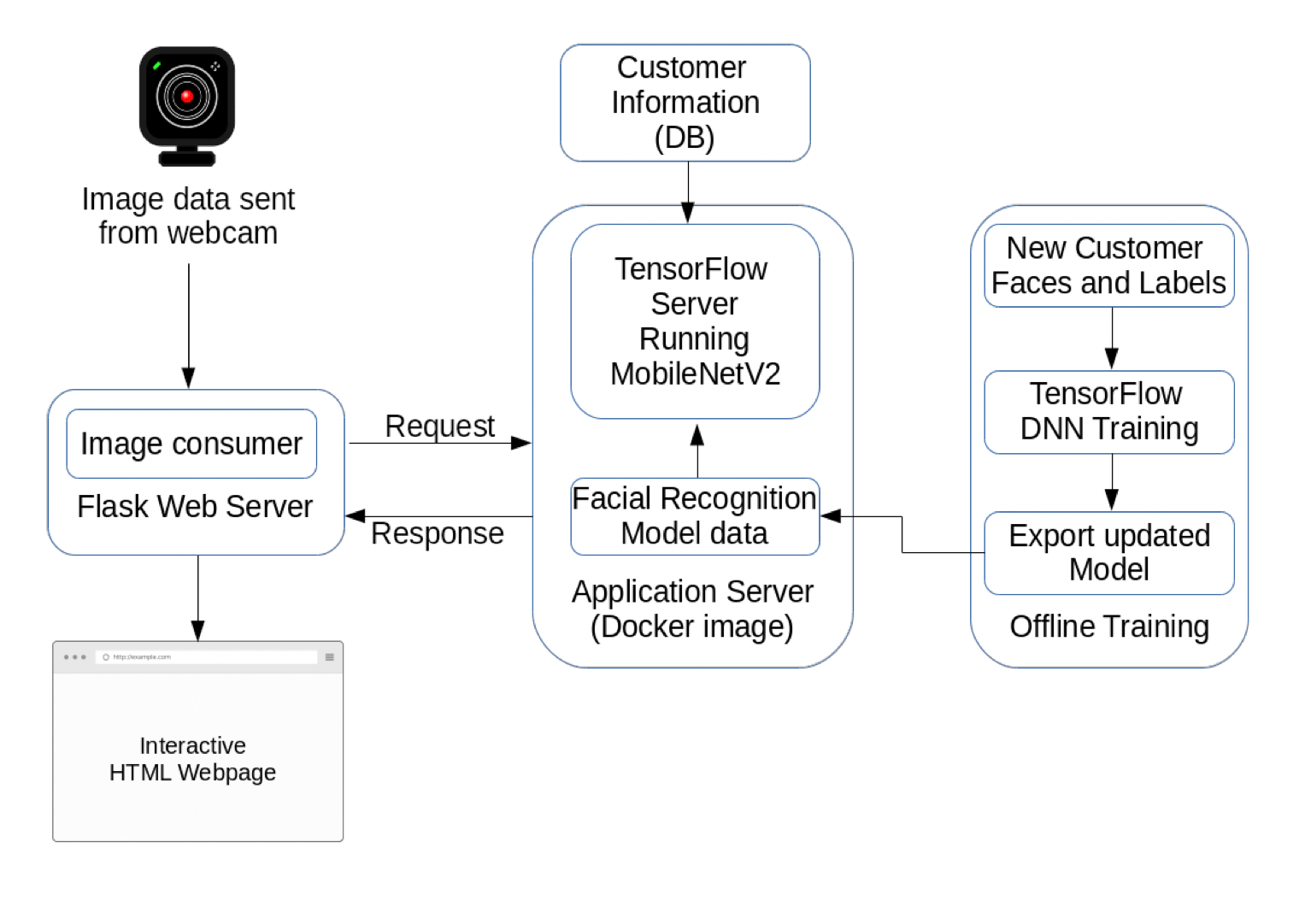

For the prototype, the application server also hosted a web application that displayed a simple web page showing the uploaded image and the data retrieved for the label associated with that image. This application stood in for the processing that could be done with the passenger information, which could range from simply relaying the user’s season ticket details to open the gate, to a detailed travel plan, including details of the next train, for a passenger standing in front of a smart display.

The application server architecture is shown in Figure 8.

Figure 8: Application Server Design

A Docker image [6] was used to avoid installing a significant number of third-party libraries on the server. The TensorFlow Docker image contains an implementation of the MobileNetV2 algorithm. For simplicity, the model was retrained on the same machine, but in a proper deployment this would take place on a separate server controlled by the train operating company, to avoid storing any images of passengers on a cloud server.

Prototype Evaluation

The implementation faced a number of challenges. The initial approaches to facial recognition were based on classical machine learning using key-feature extraction algorithms, including David Lowe’s SIFT [7], SURF [8] and HOG [9], but their performance was less than satisfactory. One critical factor was the lighting conditions under which the algorithms could perform. A well-lit subject is important for the best performance, but more work is needed to determine how performance degrades under different lighting levels.
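
HOG [9], one of the hand-crafted descriptors tried first, summarises an image region as histograms of gradient orientations. A simplified sketch of the per-cell step is shown below; it is not the project's code, and it uses hard binning into 9 unsigned orientation bins, omitting the interpolated voting and block normalisation that full HOG adds.

```python
import math
from typing import List, Sequence


def hog_cell_histogram(cell: Sequence[Sequence[float]], bins: int = 9) -> List[float]:
    """Magnitude-weighted histogram of unsigned gradient orientations (0-180 degrees)
    for one cell, using central differences and hard binning (no interpolation)."""
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    rows, cols = len(cell), len(cell[0])
    for y in range(1, rows - 1):                  # skip the border, which lacks neighbours
        for x in range(1, cols - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]
            gy = cell[y + 1][x] - cell[y - 1][x]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0   # unsigned orientation
            hist[int(angle // bin_width) % bins] += magnitude
    return hist
```

Because the histogram is built from raw intensity differences, a dimly lit face produces smaller gradient magnitudes, which gives some intuition for the lighting sensitivity observed above; full HOG's block normalisation is designed to reduce, though not eliminate, that effect.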

Given these empirical results, it was decided to use a Deep Neural Network (DNN) approach in the form of the MobileNetV2 implementation in the TensorFlow project. This performed much better, but again more work is needed to establish the exact operational constraints under which the false positive (recognising someone as someone else) and false negative (not recognising someone who should be recognised) rates are acceptably low. Note that from a revenue protection point of view, a false positive is probably worse than a false negative. However, a false negative has a negative impact on the customer experience and might reduce trust in the system, resulting in reputational damage.
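
The two error rates discussed above, and the trade-off that the confidence threshold controls, can be made concrete with a small evaluation helper. It is a sketch under stated assumptions: every trial is an enrolled passenger, a false positive is an accepted prediction naming the wrong person, a false negative is a rejected prediction, and both rates are taken over all trials.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Trial:
    true_id: str      # who was actually in front of the camera
    predicted: str    # label returned by the recogniser
    confidence: float


def error_rates(trials: Iterable[Trial], threshold: float) -> Tuple[float, float]:
    """Return (false_positive_rate, false_negative_rate) over all trials.

    False positive: accepted (confidence > threshold) but names the wrong person.
    False negative: rejected (confidence <= threshold), so the passenger is not recognised.
    """
    items = list(trials)
    if not items:
        raise ValueError("need at least one trial")
    fp = sum(1 for t in items if t.confidence > threshold and t.predicted != t.true_id)
    fn = sum(1 for t in items if t.confidence <= threshold)
    return fp / len(items), fn / len(items)
```

With the hypothetical trials `Trial("a", "a", 0.95)`, `Trial("b", "c", 0.90)`, `Trial("c", "c", 0.80)` and `Trial("d", "a", 0.60)`, a threshold of 0.85 gives rates of `(0.25, 0.5)`, while raising it to 0.92 gives `(0.0, 0.75)`: fewer misidentifications at the cost of more passengers going unrecognised.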

Conclusion and Future Work

The facial recognition summer project showed that the face recognition algorithm was fast enough for use with a smart display, but it probably needs more work to operate in a ticket gate scenario, where throughput is critical.

The other outstanding piece of work is to look at moving the server side into a trusted execution environment, such as Intel SGX enclaves, to ensure that sensitive information such as images is only ever held in encrypted form, even in memory.

Summary

The 3 summer projects have looked at potential technologies and approaches to:

- provide functionality for the iLIDS project that was descoped during the initial requirements-gathering phase as too ambitious for the project’s timescales (facial and object recognition)

- fill information gaps that are critical to the successful roll-out of the iLIDS solution (providing train consist information)

The prototypes and evaluations produced as part of these internships are intended to inform the requirements for any future enhancements to the iLIDS project.

About – Surrey Centre for Cyber Security

The Surrey Centre for Cyber Security is one of the 17 Academic Centres of Excellence in Cyber Security Research (ACEs-CSR) recognised by the UK National Cyber Security Centre (NCSC) in partnership with the Engineering and Physical Sciences Research Council (EPSRC).

The Centre focuses on “Security by Design”, with particular expertise in trusted systems, privacy and authentication, secure communications, and multimedia security and forensics, with human dimensions in security as a cross-cutting theme. We have a strong ethos of putting theory into practice, and the application domains we work in include transportation (automotive, rail), democracy (e-voting), telecommunications, the digital economy, and law enforcement.